Like many in the United States I have exceptionally slow home Internet. In fact, until recently my Internet didn’t even qualify as “broadband” as defined by the FCC. Thanks to switching to T-Mobile’s new home Internet I just barely squeak past the minimum 25 Mbps threshold of broadband.

If you’re like me, then you can relate when I say that I have taken great measures to stretch our Internet bandwidth as far as it can go. Most of that is a topic for another day, though I can sum it up by saying that OpenWrt is a wonderful product. What I’d like to talk about today is Docker.

Docker has been an amazing productivity boost since I first started using it in 2014. The ability to rigorously define the operating environment (and by extension the dependencies) of an application improves its resiliency. Mostly gone are the days of “it works on my box” – with Docker your “box” is the same as production, every time! Because of this I view Docker containers as disposable: if the app changes, rebuild the container.

All this rebuilding can leave extra “cruft” lying around, consuming precious hard disk space. And although the containers themselves are disposable, tools like Docker Compose retain volumes between runs. Both of these create a problem that can easily be remedied by various Docker purge commands.

I don’t know about you, but I’m pretty darn lazy sometimes, and remembering the whole variety of Docker commands, the right order to run them in, and then actually running them is a lot of wasted effort. I certainly have more important tasks to do, and things to remember. So, like any good lazy developer, I just hit that wonderful “Clean/Purge data” button. And, like magic, my Docker environment is once again clean and ready to get back to work.

Except… the next time Docker rebuilds, it has to pull images from Docker Hub.

Normally this wouldn’t be a problem. And, by “normally”, I mean “if your Internet doesn’t suck, this isn’t a problem”. Most Docker images are relatively small, weighing in at just a few hundred megabytes (and in some cases a lot smaller than that). However, when your Internet is underpowered, and overutilized by a family of 6 who’re stuck at home due to COVID-19, this can mean some serious downtime in productivity. This is where I got creative and hosted my own caching Docker proxy.

The setup is pretty simple. On my home server (you have a home server, right??) I pull and configure the Docker registry:
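For reference, a minimal sketch of such a setup could look like the following, assuming the official `registry:2` image running as a pull-through cache for Docker Hub. The container name `registry-cache`, port 5000, and the restart policy are illustrative assumptions; only the `/mnt/simple-storage` path comes from the setup described in this post.

```shell
# Sketch only: the container name, port, and restart policy are assumptions.
# REGISTRY_PROXY_REMOTEURL makes the registry act as a pull-through cache of Docker Hub.
# The host path is mounted over /var/lib/registry, where the registry image stores its data.
docker run -d \
  --name registry-cache \
  --restart unless-stopped \
  -p 5000:5000 \
  -e REGISTRY_PROXY_REMOTEURL=https://registry-1.docker.io \
  -v /mnt/simple-storage:/var/lib/registry \
  registry:2
```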

The setup here is pretty minimal. The only thing you need to change (versus just copying & pasting the above) is the mounted volume. The path on my machine is “/mnt/simple-storage” – change this to something on your home server. This will be the path that the registry uses to cache any images you pull.

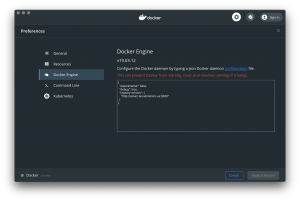

Now, on your workstation, edit your Docker Engine configuration. On my Mac, this is accessed by clicking the Docker whale icon in the menu bar, then “Preferences…”, and then “Docker Engine”. In the settings JSON, add the following:
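The setting in question is most likely the `registry-mirrors` key of the Docker Engine configuration. A hedged example of the entry, assuming the registry listens on port 5000 over plain HTTP (both are assumptions, not confirmed details of this setup):

```json
"registry-mirrors": ["http://saturn.lan.whitehorn.us:5000"]
```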

Of course, you will need to edit the URL to point to your home server. In my case saturn.lan.whitehorn.us is the DNS name for my home server; yours will probably be an IP address. The final configuration should look like this:
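As a rough illustration, a complete settings file might end up looking like the sketch below. The `experimental` key stands in for whatever settings your Docker installation already has (leave those as they are), and the port and `http://` scheme are assumptions.

```json
{
  "experimental": false,
  "registry-mirrors": ["http://saturn.lan.whitehorn.us:5000"]
}
```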

With that complete, you’re finished.

The next time you pull a Docker image from your workstation it will take the same amount of time as normal. This is because your workstation will reach out to your home server to get the image; your home server will not have it yet, so it has to fetch it across your (presumably lousy) ISP connection before caching the result. The next time you pull the same image, however, things are different.

When your workstation asks for an image that your home server has already cached, the server replies as fast as your home LAN allows – which is pretty darn fast.
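One way to see the difference for yourself is to time the same pull twice, removing the local copy in between. This is only a sketch for bash: `alpine:latest` is an arbitrary example image, and the port 5000 in the last command is an assumption about how the registry was started.

```shell
# Arbitrary example image; any public image from Docker Hub should do.
IMAGE="alpine:latest"

# First pull: the cache does not have the image yet, so it comes over the ISP link.
time docker pull "$IMAGE"

# Remove the local copy so the next pull has to go back to the mirror.
docker image rm "$IMAGE"

# Second pull: should now be served from the home server's cache.
time docker pull "$IMAGE"

# Optional: ask the registry which repositories it has stored (port is an assumption).
curl http://saturn.lan.whitehorn.us:5000/v2/_catalog
```

If the second pull is not noticeably faster, the workstation is probably not using the mirror, and the Docker Engine settings are the first thing to recheck.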